CX1115 Lab 5: Classification Tree

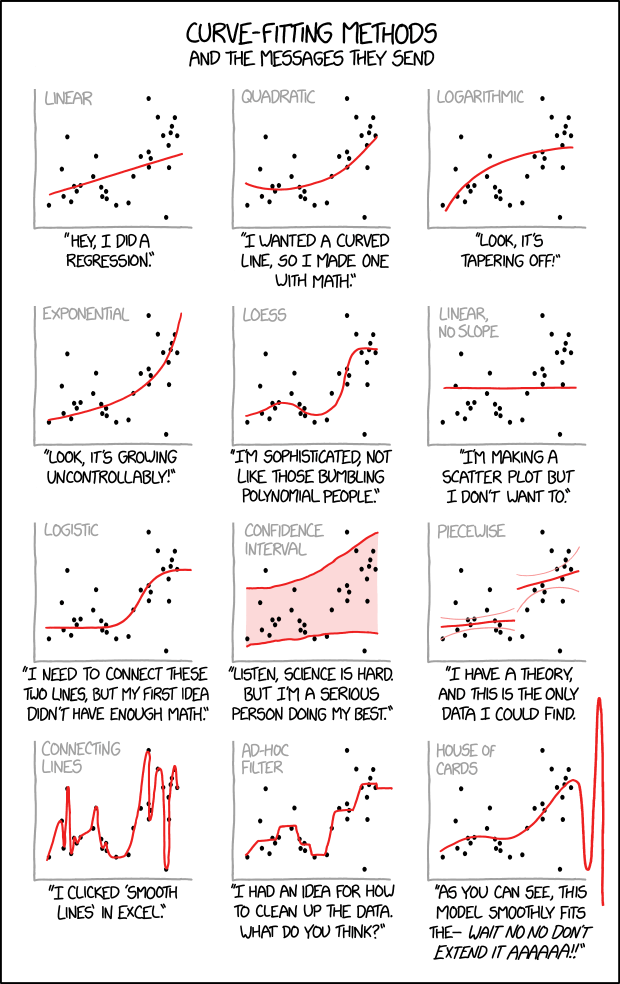

(How do you draw the plot for decision trees?)

Lab 4 Review!

- Split a dataset into train/test split via `train_test_split()`

- Build a linear regression model with sklearn, along with the Attributes and Functions for the model object:
  - Building model: `.fit()`
  - Checking model: `.intercept_`, `.coef_`
  - Using model: `.predict()`
  - Analysing model: `.score()`, `mean_squared_error()`
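As a quick refresher, the Lab 4 workflow can be sketched as below. This is a minimal example on synthetic data; the data, the variable names and the `test_size` value are assumptions for illustration, not the Lab 4 dataset.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

# Synthetic data (assumption for illustration): y = 3x + 2 plus small noise
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(200, 1))
y = 3 * X[:, 0] + 2 + rng.normal(0, 0.5, size=200)

# Split into train and test sets
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Building model
linreg = LinearRegression()
linreg.fit(X_train, y_train)

# Checking model
print("intercept:", linreg.intercept_)
print("coefficients:", linreg.coef_)

# Using model
y_pred = linreg.predict(X_test)

# Analysing model: .score() returns R^2 for regressors
r2 = linreg.score(X_test, y_test)
mse = mean_squared_error(y_test, y_pred)
print("R^2:", r2, "MSE:", mse)
```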

Overview 🗺️

(Business) Problem ↔ Data Acquisition ↔ Data Cleaning ↔ Data Exploration / Visualisation ↔ Modelling ↔ Reporting ↔ Deployment

- After building some linear regression models, we're ready to build classification models!

- Regression problems can be converted into Classification problems (e.g. predicting up/down instead of the precise closing stock price)

- Generally, regression is a tougher problem.

Classification And Regression Tree (CART) can do both tasks.

2 key learning points in Lab 5

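- SwarmPlot: like a boxplot, but you can see the actual data points (though you can't see the quartiles)
- Build a decision tree with sklearn, along with the Attributes and Functions for the model object:
  - Building model: `.fit()`
  - Checking model: `plot_tree()` (a function in `sklearn.tree` that takes the fitted model, not a method of the model)
  - Using model: `.predict()`, `.predict_proba()`
  - Analysing model: `.score()`, `confusion_matrix()` (a function in `sklearn.metrics`, not a method of the model)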
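The decision tree workflow might look like the sketch below. It assumes the iris dataset bundled with sklearn as a stand-in for the lab data, and `max_depth=2` is an arbitrary choice to keep the plot small.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend; drop this line inside a notebook
import matplotlib.pyplot as plt
from sklearn.datasets import load_iris
from sklearn.metrics import confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier, plot_tree

# Stand-in dataset (assumption): iris, 3 classes, 4 numeric predictors
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Building model
dectree = DecisionTreeClassifier(max_depth=2, random_state=0)
dectree.fit(X_train, y_train)

# Checking model: plot_tree is a function that takes the fitted model
fig, ax = plt.subplots(figsize=(8, 5))
plot_tree(dectree, filled=True, ax=ax)

# Using model
y_pred = dectree.predict(X_test)         # predicted class labels
y_proba = dectree.predict_proba(X_test)  # class proportions in the leaf reached

# Analysing model: .score() returns accuracy for classifiers
accuracy = dectree.score(X_test, y_test)
cm = confusion_matrix(y_test, y_pred)    # rows = true class, columns = predicted
print("accuracy:", accuracy)
print(cm)
```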

1. How to use decision trees

"Decision Tree is a 'partition strategy' on the data to get probabilities."

- Refer to plot in class notes - we literally slice the axes into 2 parts with a straight line - what are the implications?

- 1 node in tree = 1 split = 1 line in the plot; conditions are combined with 'AND' as you go down the tree; the left split == True with respect to the condition in the node

- Most implementations offer only binary splits (not binary classification!) on numeric independent variables. Why?

- Another way to plot your trees + arrows are actually there!
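To see the "1 node = 1 split = 1 line" idea in text form, the sketch below fits a small tree on two predictors and prints its rules. The iris dataset and the feature names `petal_length` and `petal_width` are assumptions for illustration. In sklearn, each internal node tests `feature <= threshold`, and samples for which that is True go to the left child.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier, export_text

# Two predictors only, so every split is one straight line in a 2D plot
iris = load_iris()
X = iris.data[:, 2:4]  # petal length, petal width
y = iris.target
names = ["petal_length", "petal_width"]  # hypothetical short names

tree = DecisionTreeClassifier(max_depth=2, random_state=0).fit(X, y)

# Indented text view: each level deeper adds one more 'AND' condition
rules = export_text(tree, feature_names=names)
print(rules)

# Walk the fitted tree: one threshold per internal node
t = tree.tree_
for node in range(t.node_count):
    if t.children_left[node] != -1:  # -1 marks a leaf
        print(
            f"node {node}: {names[t.feature[node]]} <= {t.threshold[node]:.2f}"
            f" (True goes left to node {t.children_left[node]})"
        )
```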

Evaluation Metrics

- Confused about confusion matrix? Check this out

- Accuracy = (TP + TN) / N, what happens if the dataset is imbalanced? Use TPR, TNR, F1, MCC... (precision, recall is confusing!)
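A small worked example shows why accuracy misleads on imbalanced data. The confusion-matrix counts below are made up for illustration: 5 positives and 95 negatives, with a classifier that finds only 1 of the 5 positives.

```python
import math

# Hypothetical counts on an imbalanced test set (5 positives, 95 negatives)
TP, FN = 1, 4   # positives: 1 found, 4 missed
TN, FP = 94, 1  # negatives: 94 correct, 1 false alarm
N = TP + FN + TN + FP

accuracy = (TP + TN) / N          # looks great
tpr = TP / (TP + FN)              # true positive rate (recall, sensitivity)
tnr = TN / (TN + FP)              # true negative rate (specificity)
f1 = 2 * TP / (2 * TP + FP + FN)  # harmonic mean of precision and recall
mcc = (TP * TN - FP * FN) / math.sqrt(
    (TP + FP) * (TP + FN) * (TN + FP) * (TN + FN)
)                                 # Matthews correlation coefficient

print(f"accuracy={accuracy:.3f} TPR={tpr:.3f} TNR={tnr:.3f} F1={f1:.3f} MCC={mcc:.3f}")
```

Accuracy comes out at 0.95 even though the model misses 4 of the 5 positives; TPR, F1 and MCC (all below 0.3 here) expose the problem.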

2. How do decision trees work?

Goal is to get pure child nodes, i.e. only 1 class. Remove impurities.

Grow tree: on train data

- Which variable to choose & how to split the variable?

Categorical: the categories give natural splits. With a binary split, 1 category can be isolated from the rest.

Numeric: sort all values in the dataset. Use either each value, or the midpoint of each pair of adjacent values, as a candidate threshold. For both numeric and categorical, try all combinations (or random choice for speed) and compute impurity.

Use Gini or Entropy as the impurity measure and pick the split with the largest drop in impurity (Information Gain). Not much difference between the two.

- Stopping conditions: tree depth, number of samples left in nodes before/after split, min change in impurity
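The split search for one numeric variable can be sketched in plain Python as below. This is a simplified illustration of the idea, not sklearn's actual implementation; the function names and the toy data are made up.

```python
import math
from collections import Counter

def gini(labels):
    """Gini impurity: 1 - sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def entropy(labels):
    """Entropy in bits: -sum(p * log2(p)) over the classes present."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def best_numeric_split(values, labels, impurity=gini):
    """Try the midpoint of each adjacent pair of sorted values.

    Returns (threshold, gain), where gain is the parent impurity minus the
    size-weighted impurity of the two child nodes.
    """
    pairs = sorted(zip(values, labels), key=lambda p: p[0])
    xs = [v for v, _ in pairs]
    ys = [lab for _, lab in pairs]
    parent = impurity(ys)
    best_threshold, best_gain = None, 0.0
    for i in range(1, len(xs)):
        if xs[i] == xs[i - 1]:
            continue  # identical values cannot be separated
        threshold = (xs[i - 1] + xs[i]) / 2
        left, right = ys[:i], ys[i:]
        weighted = (len(left) * impurity(left) + len(right) * impurity(right)) / len(ys)
        gain = parent - weighted
        if gain > best_gain:
            best_threshold, best_gain = threshold, gain
    return best_threshold, best_gain

# Toy data: class 0 for small values, class 1 for large values
x = [2.0, 1.0, 4.0, 3.0]
y = [0, 0, 1, 1]
gini_split = best_numeric_split(x, y, impurity=gini)
entropy_split = best_numeric_split(x, y, impurity=entropy)
print(gini_split, entropy_split)
```

On this toy data both measures choose the same threshold (2.5), which gives two pure child nodes. A full tree builder would repeat this search over every variable at every node until a stopping condition is met.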

Prune tree: complex trees might have overfitted, so tune the tree size on the validation set

- Apply penalty on tree complexity (e.g. no of leaves), a form of regularisation such that the final tree is smaller
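One way to try this in sklearn is cost-complexity pruning, where `ccp_alpha` is the penalty per leaf. The sketch below assumes the breast cancer dataset bundled with sklearn and a single train/validation split; both are illustrative choices, not part of the lab.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Stand-in dataset (assumption), split into train and validation sets
X, y = load_breast_cancer(return_X_y=True)
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

# Grow the full tree on train data
full_tree = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)

# Candidate penalties at which the pruned tree changes
path = full_tree.cost_complexity_pruning_path(X_train, y_train)
alphas = np.unique(np.clip(path.ccp_alphas, 0.0, None))  # guard against tiny negatives

# Tune on validation data; on ties, prefer the larger penalty (smaller tree)
best_alpha, best_score, best_tree = 0.0, -1.0, None
for alpha in alphas:
    tree = DecisionTreeClassifier(random_state=0, ccp_alpha=alpha)
    tree.fit(X_train, y_train)
    score = tree.score(X_val, y_val)
    if score >= best_score:
        best_alpha, best_score, best_tree = alpha, score, tree

print("leaves in full tree:", full_tree.get_n_leaves())
print("leaves after pruning:", best_tree.get_n_leaves())
print("best ccp_alpha:", best_alpha, "validation accuracy:", best_score)
```

The stopping conditions used while growing the tree correspond to the constructor parameters `max_depth`, `min_samples_split`, `min_samples_leaf` and `min_impurity_decrease`.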

Lab 5 Deliverables

Check the 'Assignments' tab in the lab's course site on NTULearn.

Remember to submit it within 48 hours after the end of the Lab

(i.e. 18 Feb, 10.30am)

References

Survey for Lab 6! Please let me know what you want to be covered. :)

This set of slides is made using reveal.js. It's really easy to make a basic set of slides (just HTML) and you can consider using it for simple (tech) presentations! For more advanced customization, you do need CSS and JS but scripts can be easily googled for and it has good documentation.

There are also more alternatives here.